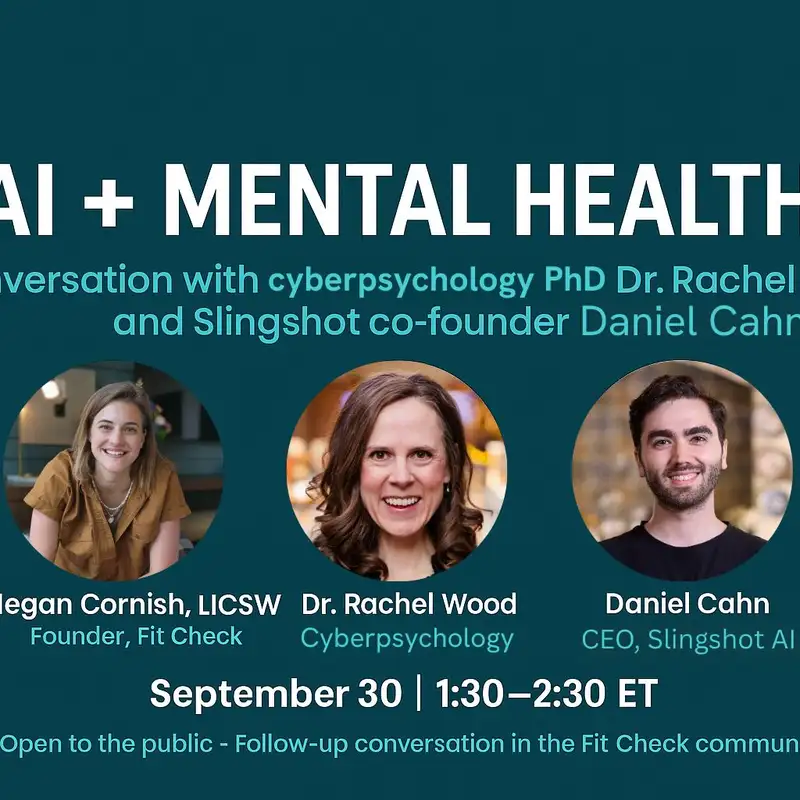

AI Therapy: An Open Conversation with Therapists

Megan Cornish:All right. So I just wanted to say thank you to everyone for joining us for this conversation, and to Daniel and Rachel for being willing to have it with me. It came up because of all the conversations that are happening on LinkedIn, in podcasts, and in Facebook groups between therapists. AI is a big deal in every industry, including ours. And on top of questions like privacy and encryption and all those things, there are also a lot of philosophical conversations that need to happen. I've been lucky enough to have them with many therapists, and I wanted to host a deeper conversation about it. So just to start, I wanted to give both of my experts the chance to introduce themselves.

Daniel Reid Cahn:Okay, thanks.

Dr. Rachel Wood:Daniel, good to see you. Hi. I am Rachel Wood. I'm a licensed counselor. I have a PhD in cyber psychology.

Dr. Rachel Wood:Everyone is like, what is that? It is essentially the scientific study of the digitally connected human experience. So my wheelhouse is AI and mental health. I'm also recently the founder of the AI Mental Health Collective, which is bringing together both clinicians and other stakeholders to have conversations like this. So Megan, I'm just so grateful that you invited me.

Dr. Rachel Wood:Thank you.

Daniel Reid Cahn:Awesome. And I'll just introduce myself quickly. I'm Daniel. I'm a machine learning engineer. I've been in AI for about ten years.

Daniel Reid Cahn:My dad's a psychologist. My mom's a social worker, so I've been in this mental health world a long time. I did my undergrad in philosophy, focused on philosophy of psychology, postgrad in AI, postgrad research in AI for mental health. I worked at the time with a crisis organization that was helping about three million people through mental health crisis, mostly around suicide. And the big problem, which is still a problem, is just a massive shortage of access to people who can help.

Daniel Reid Cahn:At the time, we were trying to figure out if AI could help. At the time, it could not. The technology just wasn't there. About, I guess, just under two years ago, I met my co-founder, Neil, who previously founded Casper. And Neil had a very different story, but similarly had come to this problem of access to any kind of mental health support.

Daniel Reid Cahn:And we both realized, you know, this has been a problem for a long time. In 1963, JFK gave this address to Congress on the problem of access to mental healthcare and the problem that there's a massive shortage of mental healthcare providers. So if it's been happening since 1963 and we're still talking about the same thing today, we probably need a new solution. So we thought maybe AI can finally help with this. And we kicked off this project to build out a model specialized for psychology.

Daniel Reid Cahn:We spent about eighteen months developing the model, and that was in partnership with behavioral health organizations and with quite a few experts, including Tom Insel, the former head of the National Institute of Mental Health, and quite a few other leaders who were involved at the time. I would also just give some context. Obviously, I can't speak for everyone in the world of AI, but we've built Ash in some ways very similar to the way that a lot of other technologies are developed, and in some ways very different. Ash is trained on a specialized dataset, so it will behave quite differently. I just wanna make sure, for context of this conversation, that I'm not gonna pretend to speak for everyone in the world.

Daniel Reid Cahn:But the big things are just that we've focused on building a model that's not general purpose, one that's specialized, one that's focused on being able to challenge people and not just validate people, and one that can actually help improve real world relationships. So that's a bit about me. Talking more widely, you mentioned philosophy. I also did a podcast for a while on AI and philosophy, and that's one of my favorite topics to talk about.

Megan Cornish:Awesome. Thank you so much. I put out the call to therapists to submit questions for this. I got a lot of responses after prompting. About half of them were specifically about Ash, which is not the intention of this conversation.

Megan Cornish:I would be happy to host, like, a... I don't wanna call it a debate, but to find a kind, smart AI skeptic to have a conversation with you about Ash. That's not the intention of this, but I did wanna give you the opportunity to answer the most common question I got and get it out of the way. You've described Ash as designed for therapy, but most therapists were asking why use the language of therapy when it's not licensed, regulated, or held to the same standards. So how do you answer that concern?

Daniel Reid Cahn:It's a great question. And by the way, funny you mentioned AI skeptics. I think you'd be very surprised that our team tends to be almost entirely AI skeptics, including the people who are working on building the AI. So we are that interesting environment, actually, a lot of the folks here. It's a good question.

Daniel Reid Cahn:I think there are kinda two parts to it. The first being, should AI therapy be regulated? Yes. Just to say the obvious thing, like, yes, 100%, of course. We've spent a long time building this, you know, and making sure that we're careful using a clinically relevant dataset, working with experts, developing layers of guardrails.

Daniel Reid Cahn:I mean, I think there's no question from our side that, yeah, there should be rules here. On the question of why it's called AI therapy, it's really funny. We've been constantly looking for, like, what do we call it? For a while, we didn't call it AI therapy. For a while, we called it AI therapy.

Daniel Reid Cahn:I would say this is still a big open question for us, what we call it. The biggest things that people come to talk to Ash about are relationship issues, family issues, self-esteem, meaning, self-worth, grief and loss. So we've tried other kinds of names. We've tried calling it, like, coaching. We're trying to identify what to call this thing that applies to a vast swath of the population who are probably not in a category that would be supported by our current clinical system, right?

Daniel Reid Cahn:They are not gonna have a diagnosable disorder, but they are dealing with the kind of problems that we all have, that they could really get some help with. We tried calling it coaching. The problem was that these kinds of problems are quite vulnerable, and it can be really hard to talk honestly about some of these vulnerable topics with this very positive connotation of coaching. We tried counseling, but it's not really about giving advice. It's much more about allowing people to open up and figure things out and develop a sense of their own ability to do things. It's funny, one big therapist I talked to last Friday suggested "emotional concierge."

Daniel Reid Cahn:I think it could be promising. It also seems a little bougie. So anyway, I would just say, the reason why we've called it therapy is that this year, Harvard Business Review announced that AI therapy is now the number one consumer use case of AI. They use the term. "Therapy" is usually just the thing people say when they're talking about using ChatGPT to talk about emotional topics.

Daniel Reid Cahn:We've struggled to communicate effectively what this thing is. It's obviously very different than the thing that human therapists can do, where they can actually be physically present with the person and share an energy with them in this kind of way that you do. But we also wanna make sure that people can feel like they can have that vulnerable conversation, that they don't have to be perfect, that this doesn't have to be about reaching for the stars all the time. Therapy seems to be the one that resonates with people.

Daniel Reid Cahn:I have no idea if we're gonna call it therapy in six months. I'm not at all married to the term, and I would love any ideas and suggestions from the community.

Megan Cornish:You heard it here first. Maybe I'll put a post up and see what the survey says. I think that you're describing a pretty familiar idea, which is that there's this space below therapy where there are mental health concerns that don't necessarily prompt the person to seek a live therapist. I think historically those people have been called friends, but we're gonna talk more about that in this conversation, and about how we can reintegrate community into mental health care and make sure AI is not undermining that. So I'm going to switch.

Megan Cornish:I'm going to give you a little break from talking, Daniel. I'm going to ask Rachel to start with us. Rachel, can you talk just a little bit about what happens in our brains during a therapeutic conversation with a human therapist, and how that may be different with AI? Just the psychology of connection that we're talking about when we're talking about AI conversations.

Dr. Rachel Wood:Yeah, so let's look at it through the lens of attunement. Emotional attunement is essentially what happens when you're sitting with a therapist: someone is leaning in to your internal state, and they are showing you, or mirroring, that they understand you. And you feel awesome because you feel seen and heard and understood, and it is a beautiful thing. The way that plays out in the brain and the body is that, first of all, the body takes this as a cue of safety, so it can shift out of the sympathetic nervous system's fight, flight, freeze, and fawn responses and really move into a relaxed parasympathetic state of safety. Also in the brain, a number of things happen, Megan, but particularly neurological resonance, which is really crucial for learning. It kind of amplifies our ability to learn new patterns and new ways of being. It's also gonna activate our prefrontal cortex, and this allows us to do things like exercise neuroplasticity, which is that cool thing that allows us to build new neural pathways, understand things in the world in a new way, and relate in a new way.

Dr. Rachel Wood:So in a therapeutic interchange, this is happening during the interchange, and then it is also going to carry over to help build our distress tolerance and our self-regulation as we move on.

Megan Cornish:And as a follow-up, do we know much about how the brain responds to AI conversations, or is that research still ongoing? Do similar processes happen in the brain? What are those interactions like?

Dr. Rachel Wood:Yeah, I mean, Daniel might want to speak to this as well. We are seeing studies coming out. Of course, we don't have longitudinal data yet, but we have studies of AI and attachment. What this is showing so far, when we look through the lens of attachment theory, is that people are indeed attaching to AI with their own individual attachment style, in the same way that they would with a human. Daniel, I'm sure you have some additions.

Daniel Reid Cahn:Yeah. No. I was just gonna say, we're doing research right now with NYU and with Stanford, and it has been largely to answer this question. So we've had some good early results already, and I can talk a lot about that in terms of demonstrating the effect of Ash in particular, which I wouldn't say is necessarily the same as an AI that's built to be a companion. One of the things that was most surprising in our research on Ash, or maybe not surprising, but definitely different than general purpose LLMs built to be companions, is the way in which it does strengthen real world human relationships.

Daniel Reid Cahn:And so you have this kind of question of, like, what is the mechanism? What is happening there? Is it a matter of encouragement? Is it attunement?

Daniel Reid Cahn:Are we building a therapeutic alliance in a similar kind of way as we would with a human? I don't want to pretend that we have all the answers. We don't. We're sharing the results as we get them, and they're coming out. We're still quite early on this.

Daniel Reid Cahn:But a lot of the questions are questions that we're gonna need to ask, which is basically, like, what are the mechanisms of change in the context of AI? I think it's possible that it's quite similar to a human. I think it's possible that it's radically different. I think we should ask both the question of, like, what is the effect? And if we can show that it is strengthening our social bonds, like, that's awesome.

Daniel Reid Cahn:Whether the mechanism is exactly the same or completely different. But, you know, just from the philosophical mind, I am really curious how all that happens. I have no idea about attunement in the context of AI, but I will say that that's an active area of research.

Megan Cornish:Good. As it should be. Daniel, I am blessed. I've worked with a lot of AI companies and I've gotten to research and talk and ask all my, you know, uneducated questions. So I feel like I have a pretty good grasp on the building of LLMs.

Megan Cornish:But I would love to have you explain how it works, the way you'd explain it to non-therapists as well. And I wanted to note something interesting. I don't know if you know this, but there's an "AI is my boyfriend" subreddit, and people share about their relationships with their AI companion. Mostly ChatGPT.

Megan Cornish:But even within that subreddit, there's a strong rule that you're not allowed to ascribe emotions or feelings or free will to AI, even in the way you talk about your relationships. And you can get banned from the sub if you break that rule. These are people who are very supportive of AI relationships, and who are very careful to make sure that nobody is saying that their AI has consciousness of any kind, which I thought was really interesting. So could you talk about what's behind this AI?

Daniel Reid Cahn:Alright. So first, I mean, there's the question of, like, what is AI? What are LLMs? I think just on the consciousness and free will points, I could talk about this for hours. So that's maybe a much deeper one.

Daniel Reid Cahn:But I would just say, well, I could easily go on for hours just talking about self-supervised learning and the way in which language models are trained. But I think the big important points are these. Language models at their core are built on an architecture called a neural network, which was a thing that people had posited fifty-plus years ago as this crazy idea: what if we were to actually try to design a computer system that works like a human brain? And I think the shocking part is that it worked, right? Language models are actually trained with this computer architecture that is modeled after the brain.

Daniel Reid Cahn:It's trained on an enormous amount of data. There are actually two stages, basically, in training the language models that we use today. The first is called pre-training, and that's where the model gains its capabilities. You start with, like, a blank, empty neural network, and then you have it read a lot of data and learn for itself from that data. For most models like ChatGPT, it's basically the Internet that it's reading.

Daniel Reid Cahn:In our case, we train a little bit differently because we train on a specialized dataset that we've built out through partnerships. And the second step is basically what we call alignment, which is where the company that builds the model typically will choose what they're trying to achieve. Most models out there are assistants. So the core goal is called instruction following, and it involves having the model learn to take in an instruction. You know, how tall is the Eiffel Tower?

Daniel Reid Cahn:Please give me the correct answer. In our case, we have a very different kind of alignment step because we do use experts, and we're specifically aligning to have helpful long-term trajectories. So whereas a general purpose assistant model is aiming to solve your problem in the next message, to give you the answer, our model is trained to create helpful trajectories that lead towards good outcomes: good conversations that specifically drive autonomy, competency, and relatedness, which we could dive into in more depth. In practice, what that means is, if you were to say something like, "I feel angry," an assistant is trying to solve a problem, so it'll say something like, "Here are three things that you can try," kinda like a Google-style answer. If you talk to Ash, it's much more likely to say something like, "So, why is anger a bad thing?"

Daniel Reid Cahn:Like, what's the problem? And it's not that there is or isn't a problem. It's that it is trained based on trajectories, and so it is trying to open up a good conversation that might lead you to be like, "Of course it's a problem. It keeps getting in the way of my life." And it's like, "Okay, this is really interesting."

Daniel Reid Cahn:"Let's talk more about that." I can go on for hours talking about how LLMs work, but I think those are the big fundamental pieces. We take this bottom piece, which is just an architecture modeled after a brain. We train it on a huge amount of data. We align it for a specific purpose. And the process is very much fundamentally different than a human.

Daniel Reid Cahn:So yes, I think though it can behave in a way that is intentionally very similar to us because it trains on our data, it's obviously quite different. But again, I don't know if that gets at the whole consciousness thing.

Megan Cornish:Yeah. Well, and you know what? I don't expect you to be able to tell me whether AI has consciousness or can't have consciousness. That's still above all of our pay grades. So you're describing a system that is really good at talking, which is pretty much what it is, to understand and then... well, it's a language model.

Megan Cornish:Right?

Daniel Reid Cahn:I think there's a piece that people sometimes miss here, which is an assumption that because it's trained on language, it's surface level. And I don't think that is the right interpretation. The classic example here is, if you train a language model on an Agatha Christie novel and, at the very end, we find out who the murderer was, the model does need to predict who the murderer was. And the way that you do that is not by being surface level and just knowing how to spout words. It's by going deeper and building for itself a mental model of what's happening in the book, to be able to make appropriate predictions.

Daniel Reid Cahn:So I think the thing I would say is, like, it is language and how it interacts. But I think there are also really strong cognitive science theories that basically rely on language as a fundamental way that our brain works. And so there is this way in which, what if language is the way that it learns, but fundamentally, it really seems like there is something deeper, still language, but it doesn't mean that it's kind of like just language. Like language actually is an extremely deep thing.

Megan Cornish:Yeah, language is how we connect ideas. The reason why I said the word talking is because originally therapy was conceptualized as a talking cure. That's what Freud called it. Eventually, we came to understand it differently, well, it kind of split, but more as a relationship cure. But Rachel, I'd love to hear any reflections you have about LLMs versus the nature of therapy.

Dr. Rachel Wood:Yeah, I do think that the issue isn't really whether AI will ever be conscious. I think the issue is how people behave when they believe it already is. We're seeing that in these subreddits, and I'm kind of deep into understanding how people are marrying their AI. I mean, there's a whole road down there, and I just want us to be mindful that all of this isn't the future. This is all happening right now. We are now talking about hundreds of millions of people who are using AI for emotional support.

Dr. Rachel Wood:I mean, ChatGPT has 700 million weekly users, and, you know, we referenced the Harvard Business Review saying that companionship and therapy is the number one use in 2025. Also, Sentio University just came out with a study that AI may be the largest provider of mental health support in the US right now. This is staggering. So I think our conversation gets to shift from "should this be happening or not" to "let's get ahead, because this is already the reality." And instead of hundreds of millions of people using it, we're gonna be seeing billions of people using it.

Dr. Rachel Wood:How do we build AI that is going to be safe and supportive for that kind of context? Right now, when we look at general purpose bots like ChatGPT, they aren't built with these underlying safety guidelines and guardrails for mental health support. Sam Altman has said this. OpenAI is like, don't use this for mental health support, and they're now trying, as we see, to band-aid some of these guidelines and guardrails on top of it. This week OpenAI has said they're going to build a network of therapists to help with what's going on in terms of the way people are using ChatGPT. So I'm of the mindset that we should be, especially as mental health experts, really helping create AI that is fit for purpose. What that means is that it's going to be used in a way where there are crisis protocols in place, where it's trauma informed in order to give body-based prompts that help de-escalate people, where there's one-touch access to a human hotline, and where we can do things like cultural competence and bias mitigation.

Dr. Rachel Wood:I mean I could go on and on in terms of the way I think that these should have certain ethical protocols in place but it's really important for us to differentiate a general purpose model and a fit for purpose model because if people are already doing this, I believe they should be using the ones that are going to be trained with some guidelines and guardrails in place.

Daniel Reid Cahn:Couldn't agree more. The other thing I would just add is, given the opportunity with this audience, I wanna push this topic a lot further. To be honest, I agree with everything that you said, Dr. Wood. But I think my concern is actually, and I think, Megan, you've gotten at a big concern around relatedness, just connection to other people. The three concerns that I have, that I had in founding this company, were autonomy, competency, and relatedness.

Daniel Reid Cahn:If you guys know it, that's from self-determination theory. But I think the three of them are exactly the things we should be concerned about with AI, from my eyes. I think those are the biggest things. And this goes well beyond the question of how we handle people in crisis. On the autonomy side, if you have something in your pocket that can always make decisions for you, you do learn to defer decisions to the AI.

Daniel Reid Cahn:You do become less autonomous. You can imagine if you went to your therapist and you said, you know, should I break up with my boyfriend? And they were like, yeah. He's toxic. You should leave him.

Daniel Reid Cahn:That would be a bad therapist because they're taking away the person's autonomy. But at least you're limited to only seeing them on some cadence. If you could actually pull that out at 2 AM and get that answer and break up with your boyfriend, you really lose that sense of autonomy. You lose that sense of competency, and I think this is something I've also heard quite a bit from therapists: if I say you should break up with your boyfriend, I'm also taking away your sense that you are the best placed person. Maybe the better answer would be something like, you know, you're actually the best placed person to make that decision.

Daniel Reid Cahn:You're the expert in your own life. You know your situation. I wanna support you, but there is no right decision here, and I'll never know as well as you do. AI is a genius. Right?

Daniel Reid Cahn:There is this sense that you're talking to an emotional genius, and there is a way in which you increasingly feel inferior, not just on the mental health aspect, but also, you know, if you're a fifth grader and it can write your essay better than you can. And on relatedness, if it's constantly validating you and it's the perfect friend that never talks about itself, of course there's a huge threat that it replaces people. So from my perspective, those are the biggest three things that I wish this conversation was always about. I want people to be constantly thinking about autonomy, competency, and relatedness.

Daniel Reid Cahn:And where I completely agree with you, Dr. Wood, is that it feels like a band-aid when we're talking about the questions about escalation. I worked in that space of escalation for the longest time, and we are massively under-resourced. We work right now with an organization in the UK that has ChatGPT referring people to them, and they can't handle the load. They are just frankly like, we don't have enough people to handle all this.

Daniel Reid Cahn:We spend our time with insurance companies, with employers that are just like, how am I supposed to give access to all these people? We've been really fortunate. We've been super happy to see how many people have used Ash and then went to therapy and have been able to go through a process where they're able to identify what kind of problems do actually need people or what kind of problems do benefit from talking to your parents, talking to your friends. I think that AI can be intentionally built with these three values. That's what we've set out to do very explicitly.

Daniel Reid Cahn:These are the three things that we said from day one are the core values that if we achieve nothing else but can help people feel an increased sense of autonomy, competency, and relatedness, we've achieved our goal.

Megan Cornish:Yeah. I think this autonomy question is really a big one, and I had sent you a list of questions in advance. But this morning, I added one because NPR had an article about AI and therapy, and it led with a story about a woman whose therapist stopped taking insurance. Her co-pays were $30, and then she was gonna have to pay $250. So she turned to ChatGPT, and she told NPR that now when she wakes up from a bad dream in the middle of the night, she can just talk to her therapist on ChatGPT, and that's something that no human can give, which is intentional, because boundaries are very important.

Megan Cornish:I would love to know how AI should be limited, and how you are thinking about intentionally limiting AI. To be honest, even using the word therapy kind of rubs therapists the wrong way for that reason. I think that our conceptualization of what AI is stays in its lane.

Daniel Reid Cahn:Just to say, I think that's fair. We've been experimenting. We're, of course, you know, early. I would mention we've taken away the word therapy. We experimented with it for a while. I think that you're right.

Daniel Reid Cahn:I think it raises some interesting questions, which is like what this might... but just getting to your question, it's a great question. So one thing I heard, I think this was yesterday, someone reached out to me. They gave us some people and they were like, "I was having a lot of trouble sleeping. I reached out to Ash in the middle of the night and Ash said, 'Okay, what's going on?' And then I dumped out all the things in my mind."

Daniel Reid Cahn:"And then Ash said, 'Got it. Maybe you can get some sleep and we'll talk about it tomorrow.'" And they were like, "This thing really helped me sleep." And I think that kind of, like, added... so I mean, the main thing that happened there, just to be clear, was Ash kicked the person out. And that has been an approach that we've taken.

Daniel Reid Cahn:In the early days when we first got started, we had a timer on sessions, and we had the idea of scheduling and a frequency and all that stuff that makes sense with people. None of that makes sense with AI. Conversations with an AI are radically different. They are not the same conversations. You know, you hear classically that people open up after, like, eight to ten sessions with a therapist.

Daniel Reid Cahn:With Ash, people usually open up in the first five minutes. So we have a lot of people where Ash says, "Hey, ready to get started?" And they're just like, "Yes, by the way, I'm gay and I've never told anyone." It's different, right? You're not taking the same risk as you would with a human.

Daniel Reid Cahn:You know it's not judgmental. Your problem's not gonna be solved by having said that out loud for a second. But something opened. This is a very different place for this technology and where it functions. On drawing limits, though, to specifically answer the question, yeah, conversations should not go on forever.

Daniel Reid Cahn:And the approach that we've taken is to try to identify based on the conversation dynamically. It's not a constant thing. Sometimes it's five minutes in if it's in the middle of the night. Sometimes it's an hour in. But get to that point where you say, you know, it seems like we've talked about a lot today, and I would love for you to get a chance to, like, integrate this into your life, to talk to other people, honestly, to get some sleep.

Daniel Reid Cahn:I mean, even just sleep is a major aspect of neuroplasticity and change. You need to sleep. You're not gonna solve your whole thing in eight hours straight. And so Ash might say, "Let me just ask you one more question and let you go."

Dr. Rachel Wood:I'd love to jump in here, particularly on the agency issue. I know we went a bit ahead of that, but I have many thoughts here. Okay, let's back up and realize why this idea of agency is foundational for where society is headed, because if we don't get this right, our kids and their kids are going to have fundamentally different relational muscles than we do. Let me go at this two different ways. First of all, on agency: micro-abdication after micro-abdication can strip us of our own critical thinking and our ability to decide what we wanna do with our life.

Dr. Rachel Wood:And I think that the younger generations in particular are slightly more vulnerable to thinking, "Oh yeah, I'll just have ChatGPT tell me what to do." This is gonna be really crippling for society on a large scale over time if that's the way this heads. That's why I don't think that LLMs should give advice. I think they should solely support the decision making and the critical thinking of the user through things like questioning and, "Hey, let's walk through a process of helping you come to your own decision." That support should be there, as opposed to a kind of yes-man answering machine.

Dr. Rachel Wood:The other thing here that's important is making sure that chatbots help practice relational skills as opposed to atrophy relational skills. And what I mean by that is you don't really have to practice patience or negotiation with an LLM. You know, you maybe text a friend, they don't get back to you for a day, like you have to be really patient. An LLM is gonna get back to you right away. So there's this erosion of some of our bidirectional relational skills that can happen over time. And again, looking down the road at society, that can really be crippling. So what I like to see is when LLMs are actually helping us practice the hard things and then really ushering us back into the arms of other humans.

Daniel Reid Cahn:I would just add, I think you're talking about practicing with AI. We were talking before about mechanisms. I mentioned, like, one of our top results was just that on average, we know that general purpose assistant models make people more lonely, more subjectively lonely, and also have fewer real world connections. And we also have seen that with Ash, people do have more valuable, meaningful connections in their lives. So what are the mechanisms there?

Daniel Reid Cahn:A lot of what we've pushed for or what we understand it to be so far has been practice, and it has been, I mean, some of it is behavioral activation, just like encouragement. Some of it is building resilience and having, you know, like a, oh, you know, you went to the party, didn't have a good time. Is it possible that you can still go to a party tomorrow and you will have a good time? You know, and talk through those kinds of things, mindfulness. A lot of it is also just helping people build basic skills, which is to say, like, I hear that you really wanna have this conversation.

Daniel Reid Cahn:You know? And the person's like, yeah, but I have no idea how to have a conversation. And sometimes there are those tips like, well, let's start with this. How do you feel? You know?

Daniel Reid Cahn:Maybe we can start with a sentence like, I feel. And there are sometimes just, like, actual, very pragmatic ways in which the LLM, rather than serving as a, you know, we're gonna solve our problem right now, just serves to help people feel comfortable developing those real world relationships, you know, handling those moments. And the conversation doesn't go into, let's try to figure out what's wrong with the other person behind their back. Instead, it's like, let's figure out how you can go over and approach that person. I just did this program a few weeks ago where I sat in a room talking about feelings with 13 other people for many, many hours. And I don't think that there's gonna be a replacement for that with AI, just to be clear.

Daniel Reid Cahn:I think that fundamentally, a lot of what you're talking about that I'm completely in agreement with has to happen in the real world. And I'm hopeful that we can have AI help people, encourage people, give them the skills such that they can have those really valuable interactions, maybe with a therapist, maybe with a group of people, maybe with their friends, maybe ideally with their community.

Megan Cornish:I think what you're describing here, Daniel, which is AI not as an alternative to a human therapist, but almost like a gateway therapist or an interim therapist

Daniel Reid Cahn:100%. Yes.

Megan Cornish:Is not necessarily something that mental health professionals would be opposed to. But again, like I mentioned earlier, the idea that it might replace therapists is very alarming, and I think you understand and agree with that. So I wanna ask, you know, we talked about ChatGPT announcing that they're gonna do a network of therapists, which is a good start.

Daniel Reid Cahn:Well, just to be clear, as far as I understood, it was more of, like, a leak about a discussion.

Megan Cornish:Oh, okay. So it wasn't even official.

Daniel Reid Cahn:Of course not. Talked to and there's extreme skepticism that this will ever happen. We looked into it, by the way, for ourselves, and it is extremely expensive. It would mean for our products that we would be enormously more expensive to run.

Daniel Reid Cahn:That's why we've never seen a mental health company take that approach. And I'm extremely skeptical that

Megan Cornish:To run a network? To have a

Daniel Reid Cahn:To be able to offer some sort of path where you can just, like, link into a therapist. Like, just have a therapist jump into a conversation or something.

Megan Cornish:Oh, gotcha. But, I mean, I'm working with the company right now to build out a directory, you know, where therapists have self identified as, I'm available. I'm in your area. I can help with these issues.

Daniel Reid Cahn:And I love those. I'm extremely supportive, but we also have to acknowledge this is just incredibly hard. Like, I just meant, like, there is a problem and we need to address it, which is the problem of just making sure people have access to therapy. And I think one of the things that we have, yes, had a lot of success with is, to be honest, not just a directory, but just talking people through what is the process of seeing a therapist. How would I know if they're a good fit for me?

Daniel Reid Cahn:And sometimes just telling people, I know that you went to a therapist and it was a bad fit. But you know, on average, people need to see, like, three therapists to find someone who is a good fit. So, you know, that's totally normal. And you know what? If they didn't get you, like, that's fine.

Daniel Reid Cahn:There are a lot of other people. There are a lot of other styles. You know, here's what the process might look like. Actually talking through those, I'm just mentioning, I think can be really impactful. I would just have a lot of skepticism on the idea of trusting a company like ChatGPT and expecting that they're gonna solve the shortage of mental health providers that we've had for sixty years.

Megan Cornish:Oh yeah, absolutely. But I mean, I have already spoken to a lot of therapists, well, not as many as there should be, who are like, all of a sudden I'm getting referrals from ChatGPT. So the idea that AI would serve as an on ramp for real life therapy is not, you know, it's already happening. I think the question that needs to be answered though is, like, what are the indicators that say that the problem is above the head of an AI, and how do we facilitate that connection to a real human? Rachel, I'd love to hear what you think.

Dr. Rachel Wood:Yeah. I think that part of where we're headed in all this, which is currently happening but going to proliferate in the future, is more of a hybrid model. You know, there have been studies done, especially with Gen Z right now, showing that they don't want only a chatbot and they don't want only a human therapist. They actually want both. So what they want to see is some sort of, I see a human therapist and then this chatbot kind of supports me in between sessions.

Dr. Rachel Wood:This is why I think it's critical that we are having conversations with clinicians to understand how you can really engage in this type of conversation with clients. Many of your clients are already using ChatGPT or Ash or whatever they're using. They're using something like that. And so how do we actually open up a conversation if somebody maybe feels they don't want to tell anyone that they're using this for emotional support? We should be a safe therapeutic space to process some of the patterns that are arising in the chats with the LLMs, and even kind of enlist the LLM to support the work that's gonna be done in between sessions. Like, hey, we identified these patterns, now in the next week, these are some very specific exercises that can support you growing in the work that we're doing.

Daniel Reid Cahn:I have some, you know, thoughts and feelings around this. I think part of it is like, you know, of course there is the picture and you're obviously clinical professionals and we should talk about what happens when people are in that space when they are accessing therapy. I definitely hear the question a lot of like, as a therapist, how should I handle the fact that my patient's using ChatGPT? All those kinds of, you know, how can I work well with this? I also think that somewhat this misses the bigger picture.

Daniel Reid Cahn:So in the US, my best understanding of the numbers, and everyone has different numbers, is that if the US were perfectly evenly distributed, there would be about 1,500 people in need for every therapist. And I think there's the question of like, should we have more? Yes, we should have more. Yes, we should have more access. But there's also the question of like, should everyone be going to therapy?

Daniel Reid Cahn:And I really appreciate, Dr. Wood, what you said on the, what are those indications that you should? I hear a lot from therapists when they talk about Ash, kind of, on my way to work, I pass 10 people every day where I'm like, I wish I could help this person. And I can't because, you know, I have a full workload, and the reality is I can't help everyone. And I also wonder, and I think this went to what you said earlier, Megan, also about the way in which we need to help people through community, which is just those questions of like, I don't wanna conflate necessarily those clinical use cases that we're talking about.

Daniel Reid Cahn:The kind of topics people talk to Ash about largely, like I mentioned, things like grief and family and relationships, those are not necessarily the kind of things where we can have a mental health system where we say, you should be going to a therapist. The reality of it is our mental health system won't pay for it, right? We don't have enough therapists for it, but also, the vast majority of those people won't have a medical diagnosis. Their insurance company is probably not going to cover therapy for what they need. And I think there's the question, there was a great article on Friday by The Hemingway Report on just the question of, like, should all of those people actually be going to therapy?

Daniel Reid Cahn:Like actually, is that the solution? I'm just mentioning this because of where we sit in the system, and I know there are other companies that I think are doing fantastic work with trying to work with therapists. There are questions about, can you decrease the duration of therapy and have people come in prepared with the kind of questions that they wanna talk about, or practice things in between sessions? Are there great hybrid models? But I also wanna mention, I think where we sit and where we think is incredibly important is for the vast majority of the population who just have access to nothing.

Megan Cornish:I think that's very true. And I think that most therapists, though not all, would agree that while almost everyone could benefit from therapy, not everyone necessarily needs therapy. But I want to hold this to my original question, which is what responsibility do AI companies have for redirecting to a higher level of care, which comes with its own set of, you know, questions. But also, I think I would take it as far as, you know, Daniel, you said you supported regulation. Do you think that should be something that is required, that ChatGPT should be held responsible for not dispensing mental health advice and instead redirecting?

Daniel Reid Cahn:It's so funny. I spend a lot of time with the folks from, you know, the therapy networks, you know, the Alma, Spring, Headway, etcetera. And, you know, the people that are trying to build directories, you know, I think that this is like a far more... Let me just start by saying this. We had someone recently who sent us a letter talking about domestic abuse. It was someone who came to Ash, and they were going through a domestic abuse situation.

Daniel Reid Cahn:And as we all know, you can't solve domestic abuse with psychoanalysis. It was very... sorry, I'm getting a little choked up. But basically, what they said in this case was, like, Ash was able to talk to them just about what it would look like to access resources. And ultimately, what they did was, as far as I understood, they went to a not for profit and were able to access a resource that was specifically built for this. And their comment was like, I wouldn't be alive today if it weren't for Ash.

Daniel Reid Cahn:Obviously, Ash did not psychoanalyze them and solve their problem at all. All it did was redirect to another resource. But I think the challenge here is a lot deeper. Right? In this case, I imagine that what they're referring to is some amount of shame, of fear, of just trying to, like, think through.

Daniel Reid Cahn:And we're talking about something, like, very deeply pragmatic, and it's very possible. A lot of people who are in those kinds of situations are reaching out to ChatGPT tomorrow. I think there is incredible importance to just being able to access resources. I did an internship a while back as a social worker, a social work intern in the City of New York, focused on helping people who were struggling to find housing. And the kind of problems that people had were not the kind, again, that are just solved.

Daniel Reid Cahn:It was access to resources. So I just wanna say, like, I think this is a massive problem. I think it's a hard one. I mean, like, I assume everyone, anyone watching this has struggled to find access to therapy. Personally, my dad's a psychologist. My mom's a social worker.

Daniel Reid Cahn:I went through a really tough time and I had everything going for me, and I still really, really struggled to find the kind of help that was helpful for me. And, you know, I eventually did get what I needed, and I'm really glad about that. I don't think everyone does. But I also just wanna say, you know, I'll tell you, like, we do refer to resources. We have some partnerships with organizations where we push to them, you know, give people guidance.

Daniel Reid Cahn:But, I mean, the process here is hard. I think there's some sort of, like, simplification that I worry about, which is, like, just get someone a therapist. And I'm like, I would freaking love to. But what if you're not insured? You know?

Daniel Reid Cahn:Like, there are crisis resources. Don't get me wrong. Right? There's 988. It's awesome that we have, like, a resource people can call when they're in crisis.

Daniel Reid Cahn:But even then, when you talk to 988 and you say, my problem's not a crisis, it's chronic, this is a long, ongoing thing. I think we need to do a lot, don't get me wrong. I also wanna make sure that we're not taking away resources. What I worry about, you know, speaking very frankly, is just the way in which we have this kind of sense that if we're gonna introduce new resources, like one new thing to solve one new problem, there's an expectation that it's gonna solve every problem out there all at once.

Megan Cornish:Dr. Wood, I wanna give you an opportunity, if you have any thoughts. You're muted though.

Dr. Rachel Wood:Yeah, thanks. Daniel, yeah, yeah, I mean, you're speaking to this larger thing that Megan alluded to earlier in the conversation, which is not everybody needs therapy, but everybody needs community. Like everybody needs a neighbor that you can talk to, that you can lean on, that you know you can call when there's a problem. And so I think there's this larger thing of specific use cases need therapy and yet that's not the answer for everything. The answer is for us to be building communities that are really going to kind of bolster all of us in the midst of really challenging times right now.

Daniel Reid Cahn:Yeah, our clinical lead Derek sometimes refers to what we build as, like, what we really need is a cultural shift. What we really need is a cultural phenomenon, like billboards. But billboards aren't enough. And like, what if we could use AI as that platform to just kind of go to each person individually and say, like, if you're gonna survive in this changing world, you need a community, and we wanna help you get that.

Megan Cornish:I completely agree with what you guys are saying. I just wanna go back one more time because, like I said, I don't think there's any therapist who disagrees that there's a huge need for mental health support. I mean, I'm all for a self help book. CBT is just as effective when you're applying it to yourself from a self help book. That's why I'm like, AI could probably take over that role.

Megan Cornish:But, Daniel, you mentioned going to work and passing 10 people that you wish you could help. I imagine they're exhibiting outward symptoms of mental illness that probably an AI is not up to. Even when I was actively practicing, there were oftentimes where I would have to make a referral and facilitate that referral because it was out of my scope. And ethically, I could lose my license if I did not facilitate the safety of that person passing to a higher level of care. So, you know, to take on the mantle of AI as therapist, or even just borrow the term, like, how can we make sure that it is also upholding these ethical and safety standards that therapists are trained in?

Daniel Reid Cahn:Yes. 100%. So, I mean, a big benefit in the context of AI is that we have, you know, such deep access to the conversation. Like, you're able to control exactly what happens. For us, we've developed seven layers of guardrails to ensure that the AI is staying kind of within its lane and the kind of stuff that it can talk about. And then also just identifying anything that could indicate risk.

Daniel Reid Cahn:Risk can come in a lot of different shapes and forms, the most obvious ones being around suicide and self harm, but there are quite a few others that we have to think very carefully about. We've developed quite a bit in terms of our systems. The way we've largely tried to frame it is just make sure that the AI stays in its lane. I think that there are the questions of referrals that I know you're bringing up. I don't think that for a technology like ours we can effectively do referrals.

Daniel Reid Cahn:I'm not saying that's not something I wish we could do, or something we can't do in the future. But I think that it would mean largely, you know, in some ways diagnosing people and deciding what's right for them. I'm not sure that our technology is yet at that place. Right?

Daniel Reid Cahn:I talk about this sometimes, and I won't go too deep here, but I sometimes talk about, like, the levels of AI therapy. So sometimes people think of AI therapy in terms of, like, titles, as if there's, like, gonna be a psychologist and a social worker and an LCSW, or there's gonna be an LMHC. There are just gonna be a bunch of different abbreviations. There are different jobs to be done, especially when we're talking about people, but it's not in a clear hierarchy of, your problem is too hard for someone of this level, and hence the hierarchy.

Daniel Reid Cahn:That's not really how it works. With AI, I think there is a bit of this confusion of like, what if AI is not yet at the level of therapists? And I think that's kind of like a weird framework. When people are looking for therapy, they're often looking for a certain kind of, like, conversation, a certain kind of help. I think there is another way to look at this, which is, like, in levels and capabilities.

Daniel Reid Cahn:So, like, ChatGPT, we think of as a level one. It's capable of helping people. It's capable of validating, and it's capable of information. Right? It can tell you stuff.

Daniel Reid Cahn:We go, we believe, like a step up, at, like, level two, which is able to challenge people, able to use evidence based techniques to go down a path. But what it's not able to do, and I think this is kinda like the level three, is, like, make judgments on behalf of that person. I definitely don't think that we're at a place where we could be making judgments. And I think that limits our ability in particular to help children or people who are not competent to be making decisions for themselves. We, for example, with children, we just draw a very clear line.

Daniel Reid Cahn:We will just block anyone. We don't support anyone under 18. There's serious mental illness. There is, you know, diagnosis, those kinds of activities that we think of as our three, four, and five. We stay fully away from them just because we don't think the AI is capable yet.

Daniel Reid Cahn:So I wanna be careful that when you talk about, like, can you identify, is this person... I think there are boundaries you can set in the kind of behaviors that you can have. You know, if there's suspicion that this person might be experiencing psychosis, you don't wanna be validating. And I don't mean you don't wanna validate something specific. I just mean like you wanna be really careful on validation generally, because you have no idea if you're about to be validating something that's a delusion. So rather than kind of draw the line and say, I've identified that you are psychotic, you're experiencing psychosis, this is what you need, what you wanna do is identify, okay, there's something weird here.

Daniel Reid Cahn:You need to just talk to people. Like, is there anyone around? Is there anyone in the house right now that you could talk to? So that's kind of where I would think. Megan, I don't wanna be evading the question.

Daniel Reid Cahn:So, like, I'm not answering.

Megan Cornish:No. No. No. I think you're getting the gist of it. I think, you know, these are the questions that most concern therapists.

Megan Cornish:So I appreciate you doing your best to explain your thinking around them. Dr. Wood, I would love to hear from you. This wasn't on my list of questions, I apologize. But, you know, I'm listening to Daniel talk about the limitations that they put, I assume, in the form of disclaimers, that they don't serve children. They don't serve certain

Daniel Reid Cahn:No. So we have seven layers. We have classifiers that monitor what the user says. We do wanna keep this a little bit black box so people can

Megan Cornish:Oh, yeah. Totally. Yeah.

Daniel Reid Cahn:We have classifiers that monitor what the user says to indicate. So for example, word salad is, like, the kind of thing that might indicate that the person's experiencing psychosis. We also have classifiers on what the AI says just to make sure that we have limits. We have red team tests. We have prompt based tests. We have pop ups. We have quite a bit. So for each one of these, I'm not just talking about disclaimers. I'm talking about

Megan Cornish:Okay. Cool. More than that. So I suppose my question for you, doctor, is more along the lines of disclaimers and whether it would be a good idea or healthy for AI to have in itself embedded regular reminders about its own limitations and the nature of who you're talking to?

Dr. Rachel Wood:Yeah. I would call it kind of disclaimers of non personhood. Essentially, it's interesting because New York has just passed something that comes into effect on November 5 that is requiring AI companions to have a, you know, non personhood disclaimer. I think it's every three hours, if I remember correctly. And so I think we're seeing some things along those lines where states are going to come together and kind of give these different things. And it is really important to be reminded, especially, I don't know if you've ever done Ash or any other AI with voice, if it's a good voice, and voices are getting better and better, you can forget that it's not a person.

Dr. Rachel Wood:So I think it is helpful to bring a reminder in that space. And also, another interesting thing, and part of this, you know, has contributed to some of the headlines we've seen that have been really tragic, and Daniel, you might have more to say technically about this, is that the longer a chat goes, the lower the model accuracy. Meaning that the longer a chat goes, and this is what ChatGPT was saying, the model isn't quite sure that it's, like, wandered into, like, bizarre conversational territory. And so I think there are these disclaimers that need to be kind of packaged around all of this, of non personhood, and even reminders to take a break. Like, hey. Have you been outside?

Dr. Rachel Wood:Like, you know, if it's rainy or sunny, like, go outside. Or have you gone to touch grass

Megan Cornish:as the kids say.

Dr. Rachel Wood:Touch grass.

Daniel Reid Cahn:Yeah. Yes. Fully in agreement. I would mention, by the way, on the long conversation thing, though, that is the reason why you need guardrails. Like, that is the reason to say that.

Daniel Reid Cahn:Once you've said, like, we're confident this model will never do a bad thing, you also need an additional layer. Right? It's like a Swiss cheese model. You wanna have those different layers of saying, like, each one, we can tell you it's perfect, but it's obviously not. And so let's have enough layers that we can be additionally confident.

Daniel Reid Cahn:And that's, I think, what ChatGPT was missing there.

Megan Cornish:Alright. We didn't have a good chance to get to all of our questions, but I think we probably have enough time to do one more. So let me see if there's any... okay. I think this is the one that clinicians will care about the most, which is just talking about what accountability model might make sense. I know, Daniel, you have strong feelings about ChatGPT being used for mental health.

Megan Cornish:So I would love to hear, you know, not necessarily for yourself, but also, you know, for non AI models. And, Dr. Wood, what do you think as well? So I'll let whoever wants to start, start with that one.

Dr. Rachel Wood:You'll have me go first, Daniel? Is that what that nod was?

Daniel Reid Cahn:I can. Yes, I've been going first a lot. Unless you'd like me to. I have plenty to say.

Dr. Rachel Wood:Oh, no. You know, let me just give a little landscape of what we are seeing right now. I don't know if this is the best way or not, but, like, California has kind of brought in now a pathway for litigation. And so essentially, with something like this, usually a bit of financial pressure maybe is what can speak the loudest, I'm not sure. But there's a pathway now for people who've been harmed by chatbots in some capacity, that they have a pathway to litigation. So that's some of that oversight that you're talking about. I think that that's not an answer for all of this. I think that that is one avenue for this, and that's at least what we're seeing kind of happening right now.

Daniel Reid Cahn:Yeah I mean I would say just to start like there's this assumption when it comes to like how to approach AI. Sometimes we, like, think a little bit too much like humans, and we have the sense of, like, you know, maybe the AI just needs to take a test, and then it will get its title of therapist, and that's gonna be and I think there's some quiet mental model we all have like that. Except that, of course, it can't. You know? Like, that doesn't make any sense.

Daniel Reid Cahn:If there were a test, AI would pass it today. Doesn't mean that it's actually good enough. The benefit that we have that's quite different from the way that we practice with humans is that we have no visibility into what goes on in the conversation with humans. With AI, we have far more ability to be keeping track of what's actually happening, to be ensuring that we're taking rapid steps to improve the model over time. Not necessarily an expectation that it's perfect on day one, but an expectation of accountability, that we will always be on top of it and that we can constantly be improving on every aspect.

Daniel Reid Cahn:I would also mention, like, on the side of, you know, financial accountability, I mean, of course, we're accountable. Yes. Financially speaking. For a company like us, I think there's also a piece that's less appreciated. So we are a venture backed startup.

Daniel Reid Cahn:You know, it's public at this point. Our lead investor, you know, is Andreessen Horowitz. There's some expectation, I think, when you're a venture backed startup, or some sense of, like, you know, you're trying to squeeze people for profit and make all the wrong decisions in the short run. And I think that it makes sense when you're talking about a big company like ChatGPT where their main goal is, you know, artificial general intelligence, solve all of humanity's problems, that it's like mental health is like a thing off to the side that's just not all that important to them. For a company like us, the goal fundamentally is to help people with their mental health.

Daniel Reid Cahn:Like, that is the outcome that we need to achieve. That means that these kinds of problems are catastrophic for us in a way that they're not for a company that's not focused on this. And it also means that for our investors, making sure that we are actually helping people is incredibly important. So it's not just on the negative side of what goes wrong, but actually holding accountability to making sure that we are actually helping people, that we're having the kind of outcomes that we want. In our case, that means improving and increasing real life relationships and connections and not decreasing them or replacing them, you know, making sure that people feel more autonomous, more confident, not less.

Daniel Reid Cahn:And that kind of accountability matters. I'm just mentioning, of course, there are the ways in which we need to introduce systems and people need to know that we're accountable. But I also do wanna mention, like, from the way that we spend time with our board, you know, Vijay, who led our round at Andreessen, was a professor at Stanford for twenty years. Like, we're talking about people who generally have the very strong opinion of, like, for this to succeed as a company, first and foremost, it needs to actually be able to help a lot of people. And fundamentally, if there's a world where we do help a ton of people and it's not a great business, they're okay with that.

Megan Cornish:I think that even the most enthusiastic capitalists agree that there are certain industries that need proactive regulation rather than reactive regulation through litigation, which is the reason why we send doctors through med school and make them get licensed rather than just wait and see if they kill someone and then they go to jail. But I also understand what you're saying, that a test doesn't make sense. So what models would work for holding... and I know, you know, you'll probably get fired for suggesting regulation if you're VC backed, but I'd love to hear if you have any thoughts on

Daniel Reid Cahn:I I wish I can answer in this short time. What I would say is we are spending a lot of time right now, with folks in the government, with folks in not for profits that are trying to organize better standards. I think we do have a concern about the wrong kind of rules being implemented, but I would mention, like, Utah's law is a good example that we're very happy with, the kind of stuff that doctor Wood was talking about we're very happy with. I think there's a lot of work towards creating better standards. And for a company like us, it really benefits us to create these, frankly, because we've spent so long developing our systems, and it does, in some ways, like, create a differentiation between a company that is investing hugely in this space that is very much dedicated to helping people with mental health.

Daniel Reid Cahn:The biggest concern I would have, just to say very frankly on regulation, has been there is, you know, like the law in Illinois that's passed that you might have seen that completely cuts out ChatGPT, like, just completely exempts ChatGPT from any of these rules. So they establish a set of rules that only affect a company like us because we intend to help people with their mental health and then create this whole cutout to say, if you don't intend to help people with their mental health, you're exempt. And that's just such a cheap, lame way for lawmakers to pretend that they're making a difference in the space and creating rules, but in practice, leaving out the company that's got 700,000,000 weekly active users.

Megan Cornish:Dr. Wood, do you have any thoughts on that?

Dr. Rachel Wood:Yeah, I mean, I think this area is so nuanced. That's why I love these conversations, because there's not any kind of general sweeping black and white here, and it's evolving so quickly that I think when you see a headline of, like, regulation on AI, some people may be like, yeah, woo hoo. And yet when you look deeper into some of these things, ChatGPT, again, is used mostly for mental health support and there's no regulation there. So we really need to be mindful in how we are helping kind of give space for companies that are trying to do this well and then companies that are general purpose models. I just think there's so much nuance here for us to be mindful of.

Daniel Reid Cahn:I would just end by saying, like, we would love to include more people in this journey for sure. I think we're early and so there's not a lot that we've put out yet, and we're right now actively working to share a lot more of our thinking very publicly, to be our own critics and make sure everyone knows the kinds of questions that we're asking. I had a meeting on Friday for about an hour with our whole clinical team that was concerned about one particular issue that I'm not gonna be going into depth on here, but I think our feeling coming out of it was like, wow. I wonder if we're the only ones talking about this right now. Yeah.

Daniel Reid Cahn:And it would be absurd if we are, because every other company that's facing these kinds of problems needs to be talking about it. So we need to be talking about it. Yeah. So I would love to include more people in this journey.

Megan Cornish:Yeah. We would love to see that happen as well. Well, thank you guys both so much. That was everything I hoped it would be. And, hopefully, we will maybe be able to do this again in the future.

Megan Cornish:And the recording will be available to Fit Check members if they wanna watch it or if they missed it, and there will be conversations in the group. And I hope you both have a wonderful day.

Dr. Rachel Wood:Thanks, Daniel. Thanks, Megan.

Daniel Reid Cahn:Thank you.

Dr. Rachel Wood:Thank you. Bye.

Creators and Guests